Citation

@misc{liu2019furnishing,
  title={Furnishing Your Room by What You See: An End-to-End Furniture Set Retrieval Framework with Rich Annotated Benchmark Dataset},
  author={Bingyuan Liu and Jiantao Zhang and Xiaoting Zhang and Wei Zhang and Chuanhui Yu and Yuan Zhou},
  year={2019},
  eprint={1911.09299},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

DeepFurniture Dataset

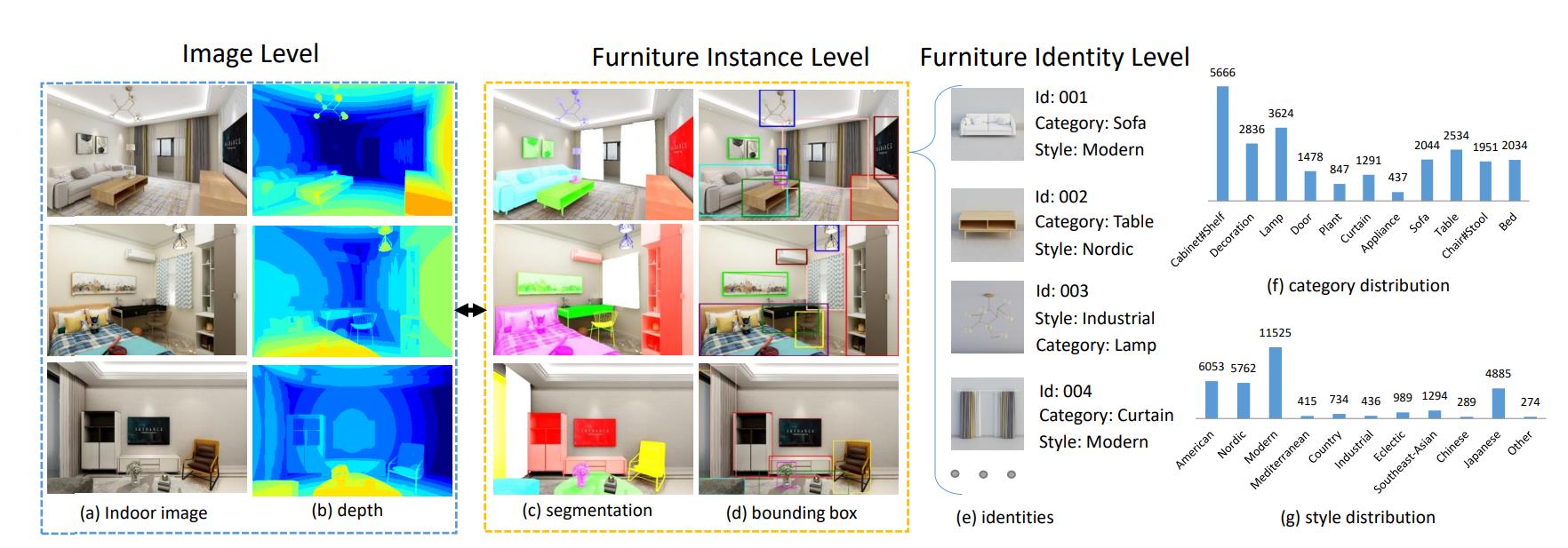

The DeepFurniture dataset provides hierarchical annotations at three levels: image, instance, and identity. Image-level data provides the interior scene and its depth information; instance-level data contains the segmentation and bounding box of every furniture instance in the scene image; and identity-level data gives each instance's category, style, and other information.

The dataset contains 24k indoor images, 170k furniture instances, and 20k unique furniture identities. To the best of our knowledge, DeepFurniture is the large-scale furniture database with the richest annotations, making it a versatile benchmark for furniture understanding.
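The three annotation levels can be pictured as linked records. The following is a minimal sketch only: the class names, field names, file names, and the bounding-box and polygon layouts are all hypothetical and do not reflect the dataset's actual file format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Identity:
    """Identity-level record: one unique furniture item (hypothetical fields)."""
    identity_id: str
    category: str
    style: str


@dataclass
class Instance:
    """Instance-level record: one furniture occurrence in a scene image."""
    identity_id: str                      # link to the identity-level record
    bbox: Tuple[int, int, int, int]       # assumed (x_min, y_min, x_max, y_max)
    segmentation: List[Tuple[int, int]]   # assumed polygon of (x, y) points


@dataclass
class SceneImage:
    """Image-level record: an interior scene plus its depth map."""
    image_path: str
    depth_path: str
    instances: List[Instance] = field(default_factory=list)


def categories_in_scene(scene: SceneImage, identities: Dict[str, Identity]) -> List[str]:
    """Resolve each instance to its identity and return the categories found."""
    return [identities[inst.identity_id].category for inst in scene.instances]


# Toy data, invented purely for illustration.
identities = {
    "id_001": Identity("id_001", "sofa", "modern"),
    "id_002": Identity("id_002", "table", "nordic"),
}
scene = SceneImage(
    image_path="scene_0001.jpg",
    depth_path="scene_0001_depth.png",
    instances=[
        Instance("id_001", (10, 20, 200, 180), [(10, 20), (200, 20), (200, 180)]),
        Instance("id_002", (220, 90, 300, 170), [(220, 90), (300, 90), (300, 170)]),
    ],
)
print(categories_in_scene(scene, identities))
```

Keeping category and style on the identity record, rather than on each instance, mirrors the hierarchy described above: many instances across many images can point to the same identity.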

Furniture Set Retrieval Framework

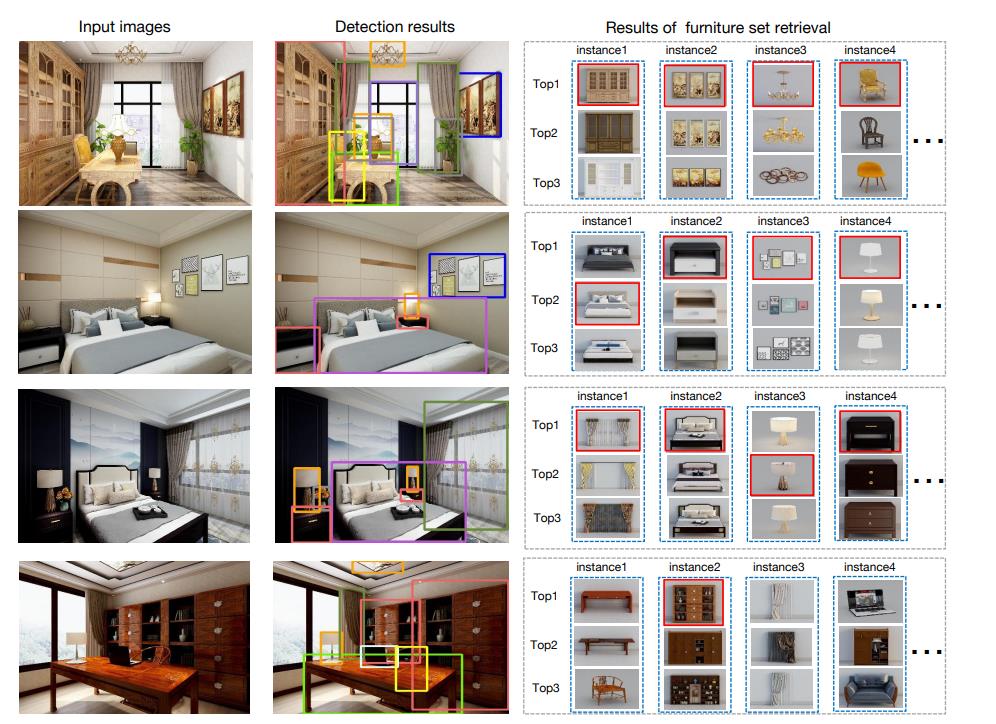

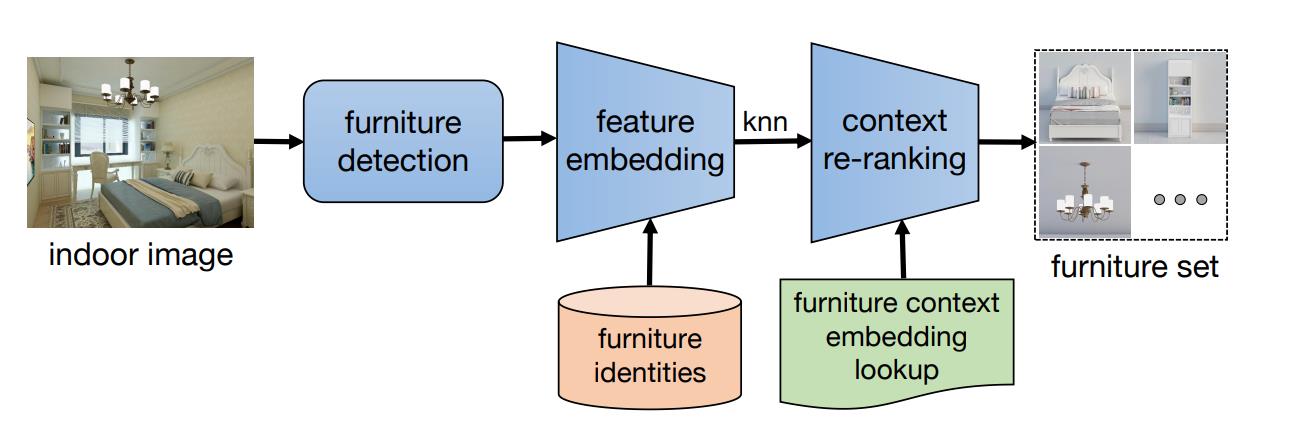

We propose a framework based on feature and context embeddings, with three major contributions: (A) An improved Mask R-CNN model with an additional mask-based classifier makes better use of segmentation information to alleviate occlusion problems in furniture detection. (B) A multi-task Siamese network trains the feature embedding model for retrieval; it consists of a classification subnet supervised by self-clustered pseudo-attributes and a verification subnet that estimates whether an input pair matches. (C) A context embedding model captures the relationships among the furniture entities in an interior design and is used to re-rank the retrieval results. Extensive experiments demonstrate the effectiveness of each module and of the overall system.
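To make contribution (B) more concrete, here is a rough sketch of how a multi-task objective for one input pair might combine the two subnets' outputs. It assumes softmax cross-entropy for the pseudo-attribute classification and binary cross-entropy for the verification output; the loss functions, the function names, and the `verification_weight` parameter are assumptions for illustration, not the losses or weights used in the paper.

```python
import math
from typing import Sequence


def softmax_cross_entropy(logits: Sequence[float], label: int) -> float:
    """Cross-entropy of a softmax over `logits` against an integer class label."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]


def binary_cross_entropy(match_logit: float, is_match: bool) -> float:
    """Numerically stable sigmoid cross-entropy on a single logit."""
    y = 1.0 if is_match else 0.0
    return max(match_logit, 0.0) - match_logit * y + math.log1p(math.exp(-abs(match_logit)))


def multitask_pair_loss(
    logits_a: Sequence[float],
    label_a: int,
    logits_b: Sequence[float],
    label_b: int,
    match_logit: float,
    is_match: bool,
    verification_weight: float = 1.0,  # hypothetical balancing weight
) -> float:
    """Sum the classification losses of both branches and the verification loss."""
    classification = (
        softmax_cross_entropy(logits_a, label_a)
        + softmax_cross_entropy(logits_b, label_b)
    )
    verification = binary_cross_entropy(match_logit, is_match)
    return classification + verification_weight * verification


# Toy call: two pseudo-attribute classes per branch and an undecided match logit.
loss = multitask_pair_loss([0.0, 0.0], 0, [0.0, 0.0], 1, 0.0, True)
print(loss)
```

In this sketch the pseudo-attribute labels would come from clustering, as the text describes, and the match logit would come from the verification subnet applied to the pair of embeddings.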

Acknowledgements

This project is supported by the Exabrain Team of Kujiale.com. We greatly appreciate the technical support and insightful comments of other team members. We also thank the artists and professionals for their great efforts in editing and labelling millions of models and scenes.